AWS SageMaker: 7 Powerful Reasons to Use This Ultimate ML Tool

Ever wondered how companies like Netflix or Amazon build smart recommendation engines? Systems like these depend on infrastructure for training and serving models at scale. AWS SageMaker is a fully managed service that makes this kind of machine learning accessible, scalable, and efficient for developers and data scientists alike.

What Is AWS SageMaker and Why It Matters

AWS SageMaker is Amazon’s answer to the growing complexity of machine learning (ML) workflows. It’s a fully managed service that enables developers and data scientists to build, train, deploy, and monitor machine learning models at scale. Unlike traditional ML development, which requires managing infrastructure, setting up environments, and handling deployment pipelines manually, AWS SageMaker simplifies the entire process from data preparation to model inference.

Core Definition and Purpose

At its core, AWS SageMaker is designed to eliminate the heavy lifting involved in machine learning. It provides a unified environment where users can access notebooks, training resources, deployment endpoints, and monitoring tools—all within a single platform. This integration drastically reduces the time it takes to go from idea to production.

- Enables end-to-end ML lifecycle management

- Supports popular frameworks like TensorFlow, PyTorch, and Scikit-learn

- Offers pre-built algorithms for common use cases

Who Uses AWS SageMaker?

The platform caters to a wide audience: from beginner data scientists experimenting with ML to enterprise teams deploying models across global applications. Startups use it to prototype quickly without investing in infrastructure, while large organizations leverage its scalability and security features for mission-critical systems.

For example, Zoetis, a global animal health company, uses AWS SageMaker to accelerate drug discovery by analyzing vast biological datasets. Similarly, Intuit leverages SageMaker to power real-time financial predictions for millions of customers.

“AWS SageMaker allows us to focus on innovation rather than infrastructure.” — AWS Customer Testimonial

Key Features That Make AWS SageMaker Stand Out

One of the biggest advantages of AWS SageMaker is its comprehensive suite of built-in tools that cover every stage of the machine learning pipeline. Let’s break down the most impactful features that set it apart from other ML platforms.

Jupyter Notebook Integration with SageMaker Studio

SageMaker Studio is the web-based IDE for machine learning, offering a visual interface to manage your entire workflow. It integrates Jupyter notebooks seamlessly, allowing you to write code, visualize data, and track experiments—all in one place.

- Real-time collaboration between team members

- Version control for notebooks via Git integration

- Drag-and-drop pipeline creation for non-coders

You can launch a notebook instance in seconds, choose from various instance types (like ml.t3.medium for light tasks or ml.p3.8xlarge for GPU-heavy workloads), and start coding immediately.

Automatic Model Training and Hyperparameter Optimization

Training a model isn’t just about feeding data—it’s about finding the right parameters. AWS SageMaker includes Automatic Model Tuning (also known as hyperparameter tuning), which uses Bayesian optimization to test different combinations and find the best-performing model.

- Define ranges for hyperparameters (e.g., learning rate, batch size)

- SageMaker runs multiple training jobs in parallel

- Selects the top-performing model based on your metric (e.g., accuracy, F1 score)

This feature alone can save weeks of manual experimentation and significantly improve model performance.
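The ranges, parallelism, and objective metric described above map fairly directly onto a tuning-job configuration. The sketch below builds the `HyperParameterTuningJobConfig` dictionary that boto3's `create_hyper_parameter_tuning_job` call accepts. The hyperparameter names (`eta`, `max_depth`), the metric `validation:auc`, and the job limits are assumptions chosen for an XGBoost-style example, not values taken from this article.

```python
import json


def build_tuning_config(max_jobs=20, max_parallel=4):
    """Return a HyperParameterTuningJobConfig dict with illustrative values."""
    return {
        # Bayesian optimization is the strategy discussed in the text
        "Strategy": "Bayesian",
        "HyperParameterTuningJobObjective": {
            "Type": "Maximize",
            "MetricName": "validation:auc",  # assumed objective metric
        },
        "ResourceLimits": {
            "MaxNumberOfTrainingJobs": max_jobs,
            "MaxParallelTrainingJobs": max_parallel,
        },
        "ParameterRanges": {
            # The API expects range bounds as strings
            "ContinuousParameterRanges": [
                {"Name": "eta", "MinValue": "0.01", "MaxValue": "0.3",
                 "ScalingType": "Logarithmic"},
            ],
            "IntegerParameterRanges": [
                {"Name": "max_depth", "MinValue": "3", "MaxValue": "10",
                 "ScalingType": "Linear"},
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_tuning_config(), indent=2))
```

A full request also needs a training job definition (container image, role, input data, and output location), which is omitted here.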

Built-in Algorithms and Pre-Trained Models

AWS SageMaker comes with a library of optimized, built-in algorithms such as XGBoost, Linear Learner, K-Means, and Object2Vec. These are pre-packaged Docker containers that run efficiently on AWS infrastructure.

- No need to write low-level code for common tasks

- Optimized for performance and cost

- Can be used directly or fine-tuned with custom data

Additionally, SageMaker JumpStart provides access to hundreds of pre-trained models for tasks like image classification, text summarization, and fraud detection—many powered by large language models (LLMs).

How AWS SageMaker Simplifies the Machine Learning Lifecycle

The machine learning lifecycle consists of several stages: data preparation, model training, evaluation, deployment, and monitoring. AWS SageMaker streamlines each of these phases, reducing both time and technical debt.

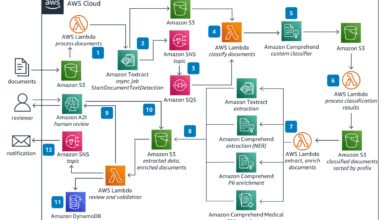

Data Preparation with SageMaker Data Wrangler

Data is the foundation of any ML project, but cleaning and transforming raw data can consume up to 80% of a data scientist’s time. SageMaker Data Wrangler reduces this burden by offering a visual interface to import, clean, transform, and visualize data.

- Connect to data sources like S3, Redshift, or databases

- Apply transformations (e.g., normalization, encoding) with point-and-click tools

- Generate Python or PySpark code automatically for reproducibility

Once processed, the data can be exported directly into SageMaker training jobs or saved back to S3 for future use.

Model Training and Distributed Computing

Training deep learning models often requires significant computational power. AWS SageMaker supports distributed training across multiple GPUs or instances, making it possible to train large models faster.

- Use SageMaker’s managed training jobs with custom scripts

- Leverage Pipe mode to stream data from S3 during training

- Enable Spot Instances to reduce training costs by up to 90%

For example, training a BERT-based NLP model on a large dataset might take days on a single machine, but with SageMaker’s distributed training, it can be completed in hours.
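Pipe mode and Spot training are both switched on through estimator arguments in the SageMaker Python SDK. The helper below is a minimal sketch that assembles those keyword arguments and checks that the wait limit is not shorter than the run limit, which Spot training requires. The function name and the S3 URI in the usage note are hypothetical.

```python
def spot_training_kwargs(max_run_seconds, max_wait_seconds, checkpoint_s3_uri):
    """Build Estimator keyword arguments for Spot training with Pipe input.

    max_wait covers both the time spent waiting for Spot capacity and the
    training time itself, so it must be at least max_run.
    """
    if max_wait_seconds < max_run_seconds:
        raise ValueError("max_wait must be at least max_run for Spot training")
    return {
        "use_spot_instances": True,
        "max_run": max_run_seconds,
        "max_wait": max_wait_seconds,
        # Checkpoints let an interrupted Spot job resume instead of restarting
        "checkpoint_s3_uri": checkpoint_s3_uri,
        # Stream training data from S3 rather than downloading it first
        "input_mode": "Pipe",
    }
```

The result can be unpacked into an estimator constructor alongside the usual arguments, for example `spot_training_kwargs(3600, 7200, "s3://example-bucket/checkpoints/")`, where the bucket name is a placeholder. The training script still has to write and reload checkpoints for interruptions to be recoverable.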

Model Deployment and Real-Time Inference

Once a model is trained, deploying it into production is often a bottleneck. AWS SageMaker makes deployment seamless with one-click model hosting.

- Deploy models as RESTful endpoints for real-time predictions

- Scale automatically based on traffic using endpoint auto scaling (Application Auto Scaling)

- Supports A/B testing with multiple model variants

You can also deploy models to edge devices using SageMaker Edge Manager, enabling offline inference on IoT devices.
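Traffic-based scaling for a real-time endpoint is configured through Application Auto Scaling. As a rough sketch, the function below builds the two request payloads involved: one registering the endpoint variant as a scalable target, and one attaching a target-tracking policy on invocations per instance. The instance limits, target value, and policy name are assumptions for illustration.

```python
def autoscaling_requests(endpoint_name, variant_name="AllTraffic",
                         min_instances=1, max_instances=4,
                         invocations_per_instance=100.0):
    """Return (scalable_target, scaling_policy) request dicts for an endpoint."""
    common = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
    }
    target = dict(common, MinCapacity=min_instances, MaxCapacity=max_instances)
    policy = dict(
        common,
        PolicyName=f"{endpoint_name}-invocations-target",  # hypothetical name
        PolicyType="TargetTrackingScaling",
        TargetTrackingScalingPolicyConfiguration={
            # Add or remove instances to hold this many invocations per instance
            "TargetValue": invocations_per_instance,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
        },
    )
    return target, policy
```

With a boto3 client created via `boto3.client("application-autoscaling")`, the payloads would be passed as `client.register_scalable_target(**target)` followed by `client.put_scaling_policy(**policy)`.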

Advanced Capabilities: SageMaker Pipelines and MLOps

As organizations scale their ML operations, managing workflows becomes critical. AWS SageMaker introduces MLOps (Machine Learning Operations) practices through SageMaker Pipelines, Model Registry, and Monitoring tools.

SageMaker Pipelines for CI/CD in ML

SageMaker Pipelines is a fully managed service for creating, automating, and managing ML workflows. It supports continuous integration and continuous delivery (CI/CD) for machine learning, similar to DevOps in software engineering.

- Define pipelines using Python SDK or JSON templates

- Automate steps: data ingestion → preprocessing → training → evaluation → deployment

- Trigger pipelines via AWS CodePipeline or EventBridge

This ensures consistency, traceability, and repeatability across ML projects.

Model Registry and Version Control

The SageMaker Model Registry acts as a central repository for all trained models. Each model version is tagged with metadata, including training job details, performance metrics, and approval status.

- Enforce governance with approval workflows

- Compare model versions side-by-side

- Integrate with third-party tools via APIs

This is especially useful in regulated industries like finance or healthcare, where audit trails are mandatory.
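An approval workflow can be as simple as a metric gate followed by a status update on the registered model version. The sketch below assumes a boto3 SageMaker client is passed in and uses its `update_model_package` call. The threshold logic and function names are illustrative, not a prescribed governance process.

```python
VALID_STATUSES = ("Approved", "Rejected", "PendingManualApproval")


def decide_status(metric_value, threshold):
    """Approve a model version only if its evaluation metric meets the bar."""
    return "Approved" if metric_value >= threshold else "Rejected"


def set_approval(sm_client, model_package_arn, status, note=""):
    """Record an approval decision on a registered model version.

    sm_client is expected to behave like boto3.client("sagemaker"); it is
    injected so the function can be exercised with a stub in tests.
    """
    if status not in VALID_STATUSES:
        raise ValueError(f"unknown approval status: {status}")
    request = {
        "ModelPackageArn": model_package_arn,
        "ModelApprovalStatus": status,
    }
    if note:
        request["ApprovalDescription"] = note
    return sm_client.update_model_package(**request)
```

Keeping the decision (`decide_status`) separate from the side effect (`set_approval`) makes it easy to route borderline models to `PendingManualApproval` for human review instead.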

Monitoring and Drift Detection

Models can degrade over time as production data drifts away from the training data (data drift) or as the relationship between inputs and the target changes (concept drift). AWS SageMaker provides Model Monitor to detect such issues automatically.

- Schedule regular monitoring jobs

- Receive alerts when data distribution shifts

- Visualize metrics in Amazon CloudWatch

For instance, a credit scoring model may start performing poorly if economic conditions change—Model Monitor helps catch this early.
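To make the idea of a distribution shift concrete, the sketch below computes a population stability index (PSI) between a baseline sample and a recent sample of one numeric feature. This is a generic illustration of drift scoring, not how Model Monitor is implemented. The bin count and the 0.2 alert threshold mentioned afterwards are common rules of thumb, assumed here for the example.

```python
import math


def population_stability_index(baseline, current, bins=10):
    """Compare two samples of a numeric feature; larger values mean more drift."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all baseline values match

    def bin_fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clip out-of-range values
        # Floor each fraction so empty bins do not cause log(0) or division by zero
        return [max(c / len(values), 1e-6) for c in counts]

    base_frac = bin_fractions(baseline)
    curr_frac = bin_fractions(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base_frac, curr_frac))


if __name__ == "__main__":
    baseline = [float(v) for v in range(100)]
    shifted = [v + 50.0 for v in baseline]
    print(population_stability_index(baseline, baseline))
    print(population_stability_index(baseline, shifted))
```

A PSI near zero suggests the feature looks like the baseline, while values above roughly 0.2 are often treated as a signal worth investigating.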

Security, Compliance, and Governance in AWS SageMaker

Security is a top priority when dealing with sensitive data in machine learning. AWS SageMaker integrates deeply with AWS’s security ecosystem to ensure data protection and regulatory compliance.

Encryption and Access Control

All data in SageMaker is encrypted at rest using AWS Key Management Service (KMS) and in transit using TLS. You can control access using IAM roles and policies.

- Assign granular permissions (e.g., who can create endpoints?)

- Use VPCs to isolate notebook instances and training jobs

- Enable logging via AWS CloudTrail for audit purposes
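Granular permissions come down to IAM policy statements scoped to specific SageMaker actions and resources. As a hedged example, the function below generates a policy document that lets a principal invoke one endpoint while explicitly denying endpoint creation. The Region, account ID, and endpoint name passed in are placeholders, and a real policy would be tailored to your own roles.

```python
import json


def invoke_only_policy(region, account_id, endpoint_name):
    """Return an IAM policy dict: may invoke one endpoint, may not create any."""
    endpoint_arn = (
        f"arn:aws:sagemaker:{region}:{account_id}:endpoint/{endpoint_name}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowInvokeOnly",
                "Effect": "Allow",
                "Action": "sagemaker:InvokeEndpoint",
                "Resource": endpoint_arn,
            },
            {
                "Sid": "DenyEndpointCreation",
                "Effect": "Deny",
                "Action": [
                    "sagemaker:CreateEndpoint",
                    "sagemaker:CreateEndpointConfig",
                ],
                "Resource": "*",
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder identifiers for illustration only
    print(json.dumps(invoke_only_policy("us-east-1", "123456789012", "churn"), indent=2))
```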

Compliance with Industry Standards

AWS SageMaker complies with major standards such as GDPR, HIPAA, SOC 2, and PCI DSS. This makes it suitable for use in healthcare, finance, and government sectors.

- Store PHI data securely with HIPAA eligibility

- Meet data residency requirements using AWS Regions

- Generate compliance reports via AWS Artifact

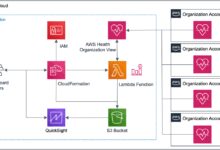

Audit Trails and Operational Visibility

Every action in SageMaker—whether launching a notebook or deploying a model—is logged and traceable. This transparency is crucial for internal audits and regulatory reviews.

- Track user activity via CloudTrail

- Monitor resource usage with Cost Explorer

- Set up alarms for unusual behavior

Cost Management and Pricing Models for AWS SageMaker

Understanding the cost structure of AWS SageMaker is essential for budgeting and optimization. The service follows a pay-as-you-go model, charging only for what you use.

Breakdown of SageMaker Pricing Components

The total cost depends on several factors:

- Notebook Instances: Hourly rate based on instance type (e.g., $0.10/hour for ml.t3.medium)

- Training Jobs: Based on instance type and duration (e.g., $4.60/hour for ml.p3.8xlarge)

- Hosting/Inference: Per-second billing for endpoint usage (e.g., $0.12/hour for ml.m5.large)

- Storage: S3 costs for data and model artifacts

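You can estimate costs using the AWS Pricing Calculator. Treat the example rates above as illustrative: actual prices vary by Region and instance type and change over time, and GPU instances are typically priced well above CPU instances.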
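For a quick back-of-the-envelope estimate, the components above can be summed as hours multiplied by hourly rate. The sketch below reuses the example rates from the list purely as placeholders, and the usage hours are invented for illustration. Substitute current rates for your Region before relying on the result.

```python
def estimate_monthly_cost(usage_hours, hourly_rates):
    """Return (per-component costs, total) given hours and rates keyed by name."""
    missing = set(usage_hours) - set(hourly_rates)
    if missing:
        raise KeyError(f"no hourly rate for: {sorted(missing)}")
    costs = {
        name: round(hours * hourly_rates[name], 2)
        for name, hours in usage_hours.items()
    }
    return costs, round(sum(costs.values()), 2)


if __name__ == "__main__":
    # Placeholder rates taken from the example list; not authoritative pricing
    rates = {"notebook": 0.10, "training": 4.60, "hosting": 0.12}
    # Hypothetical month: 40 notebook hours, 10 training hours, endpoint up 720 hours
    usage = {"notebook": 40, "training": 10, "hosting": 720}
    costs, total = estimate_monthly_cost(usage, rates)
    print(costs, total)
```

Even with these placeholder numbers, the always-on endpoint dominates the bill, which is why the hosting-related optimizations in the next section tend to matter most.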

Ways to Reduce AWS SageMaker Costs

While powerful, SageMaker can become expensive if not managed properly. Here are proven strategies to cut costs:

- Use Spot Instances for training (up to 90% discount)

- Stop notebook instances when not in use (auto-shutdown scripts available)

- Use multi-model endpoints to serve multiple models from a single instance

- Leverage serverless inference for intermittent or unpredictable traffic that can tolerate cold starts

For example, a company running nightly batch predictions can schedule batch transform jobs with AWS Lambda and EventBridge instead of keeping a real-time endpoint running around the clock.

Free Tier and Trial Options

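AWS offers a free tier for new users. The allowances change over time, so confirm the current terms on the SageMaker pricing page. As described here, they include: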

- 250 hours of t2.medium or t3.medium notebook instances per month for 2 months

- 60 hours of ml.t3.medium for training and inference per month for 2 months

- Free access to SageMaker Studio and built-in algorithms

This allows developers to experiment risk-free before scaling up.

Real-World Use Cases of AWS SageMaker

AWS SageMaker isn’t just theoretical—it’s being used by real companies to solve real problems. Let’s explore some impactful applications across industries.

Fraud Detection in Financial Services

Banks and fintech companies use SageMaker to detect fraudulent transactions in real time. By training models on historical transaction data, they can flag suspicious activities with high accuracy.

- Use XGBoost or Random Cut Forest algorithms

- Deploy models as low-latency endpoints

- Update models daily with new fraud patterns

For example, Klarna uses SageMaker to analyze millions of transactions and reduce false positives in fraud detection.

Predictive Maintenance in Manufacturing

Manufacturers use SageMaker to predict equipment failures before they happen. Sensors collect data on temperature, vibration, and pressure, which is fed into ML models to forecast breakdowns.

- Use time-series forecasting models like DeepAR

- Integrate with IoT Core for real-time data ingestion

- Trigger maintenance workflows via AWS Step Functions

This reduces downtime and maintenance costs significantly.

Personalized Recommendations in E-Commerce

Online retailers like Amazon use collaborative filtering and deep learning models to recommend products. SageMaker enables them to train and deploy these models at scale.

- Use Factorization Machines or Neural Collaborative Filtering

- Update recommendations in near real-time

- Run A/B tests to measure conversion impact

The result? Higher customer engagement and increased sales.

Getting Started with AWS SageMaker: Step-by-Step Guide

Ready to dive in? Here’s a practical guide to launching your first project on AWS SageMaker.

Step 1: Set Up Your AWS Account and IAM Permissions

First, create an AWS account if you don’t have one. Then, set up an IAM role with the necessary permissions for SageMaker.

- Navigate to IAM Console

- Create a role with the AmazonSageMakerFullAccess policy

- Attach S3 bucket policies for data access

Step 2: Launch SageMaker Studio or Notebook Instance

Go to the SageMaker console and choose between SageMaker Studio (recommended) or a classic notebook instance.

- In SageMaker Studio, click “Launch Studio”

- Choose an execution role and instance type

- Open JupyterLab interface

Step 3: Load and Explore Data

Upload your dataset to an S3 bucket or connect directly using boto3.

import sagemaker

# Create a session and look up the default S3 bucket for this account and Region
sagemaker_session = sagemaker.Session()

bucket = sagemaker_session.default_bucket()

Use pandas or Data Wrangler to explore and clean the data.

Step 4: Train a Model Using Built-in Algorithm

For this example, let’s use XGBoost to classify customer churn.

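from sagemaker.xgboost import XGBoost

role = sagemaker.get_execution_role()  # execution role of the notebook environment

xgb = XGBoost(entry_point='train.py', role=role, instance_count=1, instance_type='ml.m5.large', framework_version='1.5-1')

xgb.fit({'train': f's3://{bucket}/churn/train/'})  # placeholder S3 prefix for the training data

Calling fit() starts the training job; monitor its progress in the console. Note that this estimator runs XGBoost in script mode, so train.py must contain your training code.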

Step 5: Deploy and Test the Model

Once training completes, deploy the model as an endpoint.

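predictor = xgb.deploy(initial_instance_count=1, instance_type='ml.m5.large')

Send test data using the predictor object and evaluate the results. When you are done, call predictor.delete_endpoint(), since an endpoint is billed for as long as it runs.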
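Applications that do not use the SageMaker Python SDK can call the same endpoint through the runtime API. The sketch below assumes a boto3 `sagemaker-runtime` client is passed in and that the model accepts and returns CSV, which is an assumption about the serving container rather than something this guide specifies. The helper names are hypothetical.

```python
def rows_to_csv(rows):
    """Serialize feature rows as CSV text, one record per line."""
    return "\n".join(",".join(str(value) for value in row) for row in rows)


def predict(runtime_client, endpoint_name, rows):
    """Send rows to an endpoint and parse numeric predictions from the reply.

    runtime_client is expected to behave like
    boto3.client("sagemaker-runtime"); it is injected so the function can be
    tested with a stub instead of a live endpoint.
    """
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",
        Body=rows_to_csv(rows).encode("utf-8"),
    )
    text = response["Body"].read().decode("utf-8")
    # Accept predictions separated by either commas or newlines
    return [float(token) for token in text.replace(",", "\n").split()]
```

Passing the client in as an argument keeps the function free of credentials handling and makes it straightforward to unit test.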

“The fastest way to learn SageMaker is by doing. Start small, iterate fast.” — AWS Developer Guide

Comparison: AWS SageMaker vs. Other ML Platforms

How does AWS SageMaker stack up against competitors like Google Cloud Vertex AI, Azure Machine Learning, and open-source tools like Kubeflow and MLflow?

SageMaker vs. Google Cloud Vertex AI

Both platforms offer managed notebooks, training, and deployment. However, SageMaker has a more mature ecosystem with deeper integration across AWS services.

- SageMaker offers more built-in algorithms

- Vertex AI has stronger AutoML capabilities

- SageMaker Studio provides better visual workflow tools

Source: Google Cloud Blog

SageMaker vs. Azure Machine Learning

Azure ML excels in enterprise integration with Microsoft products, while SageMaker leads in scalability and flexibility.

- SageMaker supports more instance types and accelerators

- Azure ML has better support for ONNX and Windows environments

- SageMaker Pipelines is the counterpart to Azure Machine Learning pipelines (not to be confused with Azure Pipelines, the Azure DevOps CI/CD service)

SageMaker vs. Open-Source (Kubeflow, MLflow)

Open-source tools offer flexibility but require significant DevOps effort. SageMaker abstracts away infrastructure management, making it ideal for teams without dedicated ML engineers.

- Kubeflow requires Kubernetes expertise

- MLflow is great for experiment tracking and model packaging but leaves hosting infrastructure for you to run

- SageMaker provides full lifecycle support out of the box

What is AWS SageMaker used for?

AWS SageMaker is used to build, train, and deploy machine learning models at scale. It supports the entire ML lifecycle, from data preparation to monitoring in production. Common use cases include fraud detection, predictive maintenance, recommendation engines, and natural language processing.

Is AWS SageMaker free to use?

AWS SageMaker offers a free tier for new users, including 250 hours of notebook instances and 60 hours of training/inference per month for two months. After that, it operates on a pay-as-you-go pricing model based on resource usage.

Do I need to know coding to use SageMaker?

While SageMaker is developer-friendly and works best with Python, it also offers visual tools like Data Wrangler and Autopilot that require minimal coding. Beginners can start with pre-built algorithms and gradually learn to write custom scripts.

How does SageMaker handle model security?

SageMaker encrypts data at rest and in transit, integrates with IAM for access control, and supports VPC isolation. It also complies with standards like HIPAA, GDPR, and SOC 2, making it suitable for regulated industries.

Can I deploy SageMaker models outside AWS?

Yes. Training jobs write model artifacts to S3, so you can download them and, where the framework supports it, export them in formats like ONNX or TensorFlow SavedModel for deployment on-premises or on other clouds. Alternatively, use SageMaker Edge Manager to run models on edge devices.

AWS SageMaker has redefined how organizations approach machine learning by offering a unified, scalable, and secure platform. From startups to Fortune 500 companies, it empowers teams to innovate faster and deploy models with confidence. Whether you’re just starting out or scaling ML across your enterprise, SageMaker provides the tools, automation, and ecosystem to succeed. The future of machine learning isn’t just about algorithms—it’s about accessibility, and AWS SageMaker is leading the charge.
