AWS Athena: 7 Powerful Insights You Must Know in 2024

Imagine querying massive datasets in seconds without managing servers. That’s the appeal of AWS Athena, a serverless query service that lets you analyze data directly in S3 using standard SQL. Simple, fast, and powerful.

What Is AWS Athena and How Does It Work?

AWS Athena is a serverless query service developed by Amazon Web Services (AWS) that allows users to analyze data stored in Amazon S3 using standard SQL. Unlike traditional data warehousing solutions, Athena requires no infrastructure setup, cluster management, or server provisioning. It operates on a pay-per-query model, making it cost-effective for organizations of all sizes.

When you submit a query in Athena, the service executes the SQL statement directly against the data stored in your S3 buckets. Under the hood, Athena is built on the open-source distributed SQL engine Presto and, in Athena engine version 3, its successor Trino. Athena supports various data formats, including CSV, JSON, Apache Parquet, and Apache ORC, and integrates with the AWS Glue Data Catalog for metadata management.

Serverless Architecture Explained

One of the defining features of AWS Athena is its serverless nature. This means users don’t need to provision, scale, or manage any servers. AWS handles all the backend infrastructure, including compute resources, load balancing, and fault tolerance. You simply write SQL queries, and Athena runs them on-demand.

This architecture eliminates the need for database administrators to manage clusters or optimize performance manually. It also reduces operational overhead and allows developers and analysts to focus on data analysis rather than infrastructure maintenance.

- No servers to manage or patch

- Automatic scaling based on query complexity and data volume

- Zero downtime for maintenance or upgrades

“Athena enables organizations to shift from infrastructure-heavy analytics to insight-driven decision-making.” — AWS Official Blog

Integration with Amazon S3

AWS Athena is deeply integrated with Amazon Simple Storage Service (S3), which serves as the primary data lake for most AWS users. Data stored in S3 can be queried directly using Athena without the need to load it into a separate database system.

This tight integration allows for cost-effective storage and high scalability. You can store petabytes of data in S3 and query only the portions needed, reducing both cost and latency. Partitioning and compressing the data in S3 lets Athena scan less of it, which improves query performance and lowers cost.

For example, if you have log files organized by date in S3 (e.g., s3://my-logs/year=2024/month=04/day=05/), Athena can use partitioning to scan only relevant folders, significantly reducing execution time and cost.
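As a minimal sketch of the layout described above, the Python helper below builds the Hive-style prefix for one day of data. The function name `partition_prefix` is hypothetical, and the bucket is the example one from the text.

```python
from datetime import date


def partition_prefix(base_uri: str, day: date) -> str:
    """Return the Hive-style year=/month=/day= prefix for one day of data."""
    base = base_uri.rstrip("/")
    return f"{base}/year={day.year:04d}/month={day.month:02d}/day={day.day:02d}/"


print(partition_prefix("s3://my-logs", date(2024, 4, 5)))
# s3://my-logs/year=2024/month=04/day=05/
```

Writing files under prefixes like this is what lets a filter on year, month, and day map to a small set of folders.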

Key Features That Make AWS Athena Stand Out

AWS Athena offers a suite of features that make it a preferred choice for modern data analytics. From its support for standard SQL to advanced data format optimizations, these capabilities empower users to extract insights quickly and efficiently.

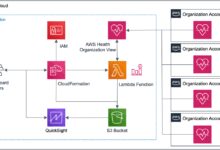

Its seamless integration with other AWS services like AWS Glue, Lambda, and QuickSight enhances its functionality, enabling end-to-end data workflows without leaving the AWS ecosystem.

Support for Standard SQL

Athena supports ANSI SQL, allowing analysts and developers familiar with SQL to get started immediately. Whether you’re filtering records, joining tables, or aggregating data, the syntax remains consistent with traditional relational databases.

This compatibility reduces the learning curve and enables easy migration of existing SQL-based reporting tools. You can connect BI tools like Tableau, Looker, or Power BI directly to Athena using JDBC or ODBC drivers.

- Familiar SQL syntax for SELECT, JOIN, WHERE, GROUP BY, etc.

- Support for complex queries including subqueries and CTEs (Common Table Expressions)

- Pattern matching on text columns via regular-expression functions such as regexp_like

Data Format Optimization

Athena supports multiple data formats, but performance varies significantly depending on the format used. Columnar formats like Apache Parquet and ORC are highly optimized for analytical queries because they store data by column rather than row, reducing I/O and improving compression.

For instance, if you only need to query the ‘revenue’ column from a 100-column dataset, Parquet will read only that column instead of scanning the entire row. This leads to faster query execution and lower costs since you’re charged based on the amount of data scanned.

AWS recommends converting raw CSV or JSON files into Parquet or ORC using AWS Glue ETL jobs for optimal performance. This transformation can reduce query costs by up to 90% in some cases.
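For data that is already queryable in Athena, one alternative to a Glue ETL job is a CTAS (CREATE TABLE AS SELECT) statement. The sketch below only builds the SQL text; the helper name, table names, and bucket are hypothetical, and it assumes the source table already exists.

```python
def build_ctas_to_parquet(source_table: str, target_table: str,
                          external_location: str, columns: list[str],
                          partition_columns: list[str]) -> str:
    """Build an Athena CTAS statement that rewrites a table as Snappy-compressed Parquet."""
    # Athena expects partition columns to come last in the SELECT list.
    select_list = ", ".join(columns + partition_columns)
    props = [
        "format = 'PARQUET'",
        "write_compression = 'SNAPPY'",
        f"external_location = '{external_location}'",
    ]
    if partition_columns:
        quoted = ", ".join(f"'{c}'" for c in partition_columns)
        props.append(f"partitioned_by = ARRAY[{quoted}]")
    with_clause = ",\n  ".join(props)
    return (
        f"CREATE TABLE {target_table}\n"
        f"WITH (\n  {with_clause}\n)\n"
        f"AS SELECT {select_list}\n"
        f"FROM {source_table}"
    )


# Hypothetical names, for illustration only.
print(build_ctas_to_parquet(
    "sales_csv", "sales_parquet", "s3://my-bucket/sales_parquet/",
    columns=["order_id", "revenue"], partition_columns=["year"],
))
```

The generated statement can be pasted into the Athena console; the savings depend on the data and the queries run against it.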

“Using Parquet with Athena reduced our monthly query costs by 85%.” — Tech Lead, SaaS Analytics Company

Setting Up Your First Query in AWS Athena

Getting started with AWS Athena is straightforward. Within minutes, you can configure your environment and run your first query. The process involves creating a database, defining a table schema, and executing a SQL query against data stored in S3.

Before diving in, ensure you have the necessary IAM permissions and that your data is properly organized in S3 with clear naming conventions and folder structures.

Step-by-Step Setup Guide

1. Open the AWS Management Console and navigate to the Athena service.

2. Set up a query result location in S3 where Athena will store output files.

3. Create a new database using the CREATE DATABASE command.

4. Define a table schema using CREATE TABLE with the appropriate data types and location in S3.

5. Run a simple SELECT * FROM table_name LIMIT 10; to verify the setup.

For example, if you have JSON logs stored at s3://my-app-logs/prod/, you can define a table like this:

-- timestamp is a reserved word in Athena DDL, so it is escaped with backticks
CREATE EXTERNAL TABLE IF NOT EXISTS logs_json (
  `timestamp` STRING,
  level STRING,
  message STRING
)
ROW FORMAT SERDE 'org.openx.data.jsonserde.JsonSerDe'
LOCATION 's3://my-app-logs/prod/';

After creation, querying becomes as simple as running standard SQL.
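The same query can also be submitted programmatically. The following is a sketch, assuming the boto3 SDK is installed and AWS credentials are configured; the helper names, database name, and results bucket are hypothetical. The parameter names follow the Athena StartQueryExecution API.

```python
from typing import Optional


def build_start_query_params(sql: str, database: str, output_location: str,
                             workgroup: Optional[str] = None) -> dict:
    """Assemble keyword arguments for the Athena StartQueryExecution API."""
    params = {
        "QueryString": sql,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
    }
    if workgroup:
        params["WorkGroup"] = workgroup
    return params


def start_query(params: dict) -> str:
    """Submit the query and return its execution ID (needs boto3 and credentials)."""
    import boto3  # imported lazily so the builder above works without boto3

    response = boto3.client("athena").start_query_execution(**params)
    return response["QueryExecutionId"]


params = build_start_query_params(
    "SELECT * FROM logs_json LIMIT 10",
    database="my_database",                     # hypothetical database name
    output_location="s3://my-athena-results/",  # hypothetical results bucket
)
# query_id = start_query(params)  # uncomment to run against a real account
```

Keeping the parameter builder separate from the network call makes the request easy to inspect before anything is billed.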

Best Practices for Schema Design

Designing an effective schema is crucial for performance and cost efficiency. Use descriptive column names and appropriate data types (e.g., INT instead of STRING for numeric fields). Partition large datasets by date, region, or category to limit the amount of data scanned.

For example, partitioning by year, month, and day allows Athena to skip irrelevant partitions during query execution. You can also use bucketing for high-cardinality fields to further optimize performance.

- Always specify the correct SerDe (Serializer/Deserializer) for non-Parquet formats

- Use partition projection to automate partition management

- Avoid overly nested JSON structures that increase parsing overhead

Performance Optimization Techniques in AWS Athena

While AWS Athena is designed for speed and simplicity, performance can vary based on data structure, query design, and configuration. Optimizing these elements ensures faster results and lower costs.

Since Athena charges based on the volume of data scanned per query, reducing unnecessary data access is key to cost control.

Partitioning Strategies

Partitioning is one of the most effective ways to improve query performance. By organizing data into directories based on specific attributes (like date or region), Athena can skip entire folders that don’t match the query filter.

For example, if your query filters by date = '2024-04-05', Athena will only scan files in the corresponding partition folder instead of scanning all historical data.

New partitions can be registered manually (for example with ALTER TABLE ADD PARTITION or MSCK REPAIR TABLE) or automatically by AWS Glue crawlers. Athena also supports partition projection, which computes partition locations from table properties, so no partitions need to be added when new data arrives.

Learn more about partitioning in the official AWS documentation.
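Partition projection is configured through table properties. The sketch below generates a TBLPROPERTIES clause for a year/month/day layout like the one used earlier; the helper names and the year range are hypothetical, and it assumes the table declares year, month, and day as partition columns.

```python
def integer_projection(column: str, low: int, high: int, digits: int = 0) -> dict:
    """Projection properties for one integer partition column."""
    props = {
        f"projection.{column}.type": "integer",
        f"projection.{column}.range": f"{low},{high}",
    }
    if digits:
        # Zero-pad values so month 4 maps to the folder month=04.
        props[f"projection.{column}.digits"] = str(digits)
    return props


def ymd_projection_properties(base_uri: str, first_year: int, last_year: int) -> dict:
    """Table properties that project year=/month=/day= partitions under base_uri."""
    props = {"projection.enabled": "true"}
    props.update(integer_projection("year", first_year, last_year))
    props.update(integer_projection("month", 1, 12, digits=2))
    props.update(integer_projection("day", 1, 31, digits=2))
    props["storage.location.template"] = (
        base_uri.rstrip("/") + "/year=${year}/month=${month}/day=${day}/"
    )
    return props


def to_tblproperties(props: dict) -> str:
    """Render the properties as a TBLPROPERTIES clause for CREATE or ALTER TABLE."""
    body = ",\n  ".join(f"'{k}' = '{v}'" for k, v in props.items())
    return f"TBLPROPERTIES (\n  {body}\n)"


print(to_tblproperties(ymd_projection_properties("s3://my-logs", 2020, 2030)))
```

With these properties in place, Athena derives partition locations from the query filter instead of looking them up in the catalog.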

Using Columnar Formats (Parquet & ORC)

As previously mentioned, columnar storage formats like Parquet and ORC significantly enhance query performance. They compress data more efficiently and allow Athena to read only the columns needed for a query.

Converting your raw data into Parquet can be done using AWS Glue ETL jobs, Spark on EMR, or even Lambda functions for smaller datasets. Once converted, you’ll notice faster query times and reduced costs.

For example, a 1 TB CSV file might compress down to 200 GB in Parquet format. If your query accesses only 5 of 50 columns, and the columns are of roughly similar size, Athena scans about 20 GB instead of the full 1 TB.

- Convert CSV/JSON to Parquet using AWS Glue

- Apply Snappy or GZIP compression for additional savings

- Use partitioning + columnar format together for maximum efficiency
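The arithmetic in the example above can be written out as a short sketch. It assumes every column takes an equal share of the Parquet file and uses decimal units (1 TB = 1000 GB); the function names are hypothetical.

```python
def estimate_scanned_gb(parquet_gb: float, columns_read: int, columns_total: int) -> float:
    """Rough estimate of data scanned, assuming equally sized columns."""
    return parquet_gb * columns_read / columns_total


def scan_cost_usd(scanned_gb: float, price_per_tb: float = 5.0) -> float:
    """Cost of scanning scanned_gb at a per-terabyte price (1 TB = 1000 GB here)."""
    return scanned_gb / 1000 * price_per_tb


scanned_gb = estimate_scanned_gb(parquet_gb=200, columns_read=5, columns_total=50)
print(f"{scanned_gb:.0f} GB scanned, about ${scan_cost_usd(scanned_gb):.2f}")
# 20 GB scanned, about $0.10
print(f"Full 1 TB CSV scan: about ${scan_cost_usd(1000):.2f}")
# Full 1 TB CSV scan: about $5.00
```

Real columns vary in size and compressibility, so treat the result as an order-of-magnitude estimate.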

Security and Access Control in AWS Athena

Security is a top priority when dealing with sensitive data. AWS Athena integrates with AWS Identity and Access Management (IAM), AWS Lake Formation, and encryption mechanisms to ensure secure data access and compliance.

Organizations can enforce fine-grained access controls, audit query activity, and protect data at rest and in transit.

IAM Policies and Fine-Grained Permissions

You can control who can run queries, access specific databases or tables, and manage workgroups using IAM policies. For example, you can restrict a user to only query the sales database and prevent them from dropping tables or accessing PII (Personally Identifiable Information).

Here’s an example IAM policy snippet:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "athena:StartQueryExecution",
        "athena:GetQueryResults"
      ],
      "Resource": "arn:aws:athena:us-east-1:123456789012:workgroup/analysts"
    }
  ]
}

This grants permission to execute queries only within the ‘analysts’ workgroup.

Data Encryption and Compliance

AWS Athena supports encryption of query results stored in S3 using S3-managed keys (SSE-S3) or AWS Key Management Service keys (SSE-KMS). With SSE-KMS, someone who gains access to the S3 bucket still cannot read the output files unless they are also allowed to use the KMS key.

Additionally, data queried from S3 should also be encrypted. Athena reads data as-is, so enabling default encryption on your S3 buckets is a best practice.

- Enable SSE-S3 or SSE-KMS on S3 buckets

- Use AWS Lake Formation for centralized data governance

- Log all Athena queries via AWS CloudTrail for auditing
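Result encryption can be requested per query through the ResultConfiguration structure of the Athena API. The sketch below builds that structure; the helper name, bucket, and key ARN are hypothetical placeholders.

```python
from typing import Optional


def build_result_configuration(output_location: str,
                               kms_key_arn: Optional[str] = None) -> dict:
    """Build a ResultConfiguration that encrypts query results at rest."""
    if kms_key_arn:
        # SSE-KMS: readers also need permission to use this KMS key.
        encryption = {"EncryptionOption": "SSE_KMS", "KmsKey": kms_key_arn}
    else:
        # SSE-S3: S3-managed keys, no key ARN required.
        encryption = {"EncryptionOption": "SSE_S3"}
    return {
        "OutputLocation": output_location,
        "EncryptionConfiguration": encryption,
    }


config = build_result_configuration(
    "s3://my-athena-results/",  # hypothetical results bucket
    kms_key_arn="arn:aws:kms:us-east-1:123456789012:key/EXAMPLE-KEY-ID",  # placeholder
)
```

The same settings can be enforced for every query in a workgroup, which avoids relying on each client to pass them.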

“Security isn’t an afterthought—it’s built into every layer of AWS Athena.” — AWS Security Whitepaper

Cost Management and Pricing Model of AWS Athena

Understanding AWS Athena’s pricing model is essential for budgeting and cost optimization. Athena uses a pay-per-query model, charging $5 per terabyte of data scanned. This means you only pay for what you use, with no upfront costs or minimum fees.

However, inefficient queries that scan large volumes of unoptimized data can lead to unexpectedly high bills. Therefore, cost management should be a core part of your Athena strategy.

How Athena Pricing Works

The primary cost factor in AWS Athena is the amount of data scanned per query. If a query scans 100 GB of data, the cost is about $0.50 (since $5/TB is roughly $0.005/GB). Athena does not bill for query execution time. Query results are written to your S3 bucket, so both the results and the source data incur standard S3 charges.

Additional costs may arise from using AWS Glue for catalog management or data conversion, but these are separate from Athena’s core pricing.

- Minimum billed per query: 10 MB of data scanned

- No cost for failed queries

- No separate Athena fee for storing query results (standard S3 charges apply)
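The pricing rules above can be sketched as a small calculator. It assumes decimal units to match the $5/TB = $0.005/GB shorthand, rounds scanned bytes up to the next megabyte, and applies the 10 MB minimum; the function name is hypothetical and the result is an estimate for successful queries, not a billing statement.

```python
import math

BYTES_PER_MB = 1_000_000  # decimal units, matching the shorthand in the text
MB_PER_TB = 1_000_000
MIN_BILLED_MB = 10        # minimum data billed per query


def query_cost_usd(bytes_scanned: int, price_per_tb: float = 5.0) -> float:
    """Estimate the cost of one successful query from the bytes it scanned."""
    billed_mb = max(MIN_BILLED_MB, math.ceil(bytes_scanned / BYTES_PER_MB))
    return billed_mb / MB_PER_TB * price_per_tb


print(f"${query_cost_usd(100 * 10**9):.2f}")  # 100 GB scanned
# $0.50
print(f"${query_cost_usd(2 * 10**6):.5f}")    # 2 MB scanned, billed as 10 MB
# $0.00005
```

The minimum matters mostly for workloads that run many tiny queries, where each one is billed as if it scanned 10 MB.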

Tips to Reduce Athena Costs

To keep costs under control, follow these proven strategies:

- Convert data to columnar formats (Parquet/ORC)

- Implement partitioning to reduce scanned data

- Use compressed formats (Snappy, GZIP)

- Use LIMIT during exploration to keep result sets small (it does not always reduce the data scanned)

- Monitor query history and identify expensive queries

You can also set up cost alerts using AWS Budgets and track spending through Cost Explorer.
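To find expensive queries, one option is to rank query executions by the data they scanned. The sketch below assumes records shaped like the QueryExecution objects returned by the Athena GetQueryExecution API, which report `Statistics.DataScannedInBytes`; the function name and sample records are hypothetical.

```python
def most_expensive_queries(executions: list[dict], top_n: int = 5) -> list[tuple[str, int]]:
    """Return (query ID, bytes scanned) pairs, largest scan first."""
    scanned = [
        (e["QueryExecutionId"], e.get("Statistics", {}).get("DataScannedInBytes", 0))
        for e in executions
    ]
    return sorted(scanned, key=lambda item: item[1], reverse=True)[:top_n]


# Made-up sample records for illustration.
sample = [
    {"QueryExecutionId": "q-1", "Statistics": {"DataScannedInBytes": 5_000_000}},
    {"QueryExecutionId": "q-2", "Statistics": {"DataScannedInBytes": 900_000_000_000}},
    {"QueryExecutionId": "q-3"},  # e.g. a query with no statistics recorded
]
for query_id, scanned_bytes in most_expensive_queries(sample, top_n=2):
    print(query_id, scanned_bytes)
```

Queries that repeatedly top this list are the first candidates for partition filters or a columnar rewrite.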

Check the latest pricing details on the AWS Athena pricing page.

Real-World Use Cases of AWS Athena

AWS Athena is not just a theoretical tool—it’s actively used across industries for real-world data analysis. From log analytics to business intelligence, its flexibility and speed make it ideal for a wide range of applications.

Organizations leverage Athena to gain insights without building complex data pipelines or maintaining expensive data warehouses.

Log and Event Data Analysis

One of the most common use cases is analyzing application, server, and VPC flow logs. Companies store logs in S3 and use Athena to query them for troubleshooting, security audits, or performance monitoring.

For example, a DevOps team can run queries to find all error-level logs from a specific service over the past 24 hours:

-- Assumes "timestamp" is a TIMESTAMP column; parse it first if it is stored as a string
SELECT * FROM app_logs
WHERE level = 'ERROR'
AND "timestamp" >= current_timestamp - interval '24' hour;

This helps identify issues quickly without needing a dedicated logging platform like Splunk.

Business Intelligence and Reporting

With integration into BI tools like Amazon QuickSight, Tableau, and Looker, Athena serves as a powerful backend for dashboards and reports. Analysts can build interactive visualizations directly on top of S3 data lakes.

Retail companies, for instance, use Athena to analyze sales trends, customer behavior, and inventory levels by querying transaction data stored in Parquet format.

- Generate daily sales reports

- Analyze customer segmentation

- Track marketing campaign performance

Common Challenges and How to Overcome Them

Despite its advantages, AWS Athena comes with certain limitations and challenges. Understanding these pitfalls and knowing how to address them is crucial for long-term success.

From query latency to schema evolution issues, proactive planning can mitigate most problems.

Query Latency and Timeout Issues

While Athena is fast for most queries, complex joins or large scans can take minutes to complete. Queries time out after 30 minutes by default, which can be problematic for heavy analytical workloads.

To overcome this, optimize queries by filtering early, using partitioning, and avoiding SELECT *. Also, consider requesting a higher query timeout through Service Quotas or breaking large queries into smaller chunks.
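Client code that submits long-running queries usually polls for completion. The sketch below shows one way to do that with capped exponential backoff and a client-side timeout. The function name and parameters are hypothetical; `get_state` is any callable that returns the current Athena query state.

```python
import time
from typing import Callable

TERMINAL_STATES = {"SUCCEEDED", "FAILED", "CANCELLED"}


def wait_for_query(get_state: Callable[[], str], timeout_s: float = 1800.0,
                   initial_delay_s: float = 1.0, max_delay_s: float = 30.0,
                   sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll until the query reaches a terminal state or the client-side timeout passes."""
    waited = 0.0
    delay = initial_delay_s
    while True:
        state = get_state()
        if state in TERMINAL_STATES:
            return state
        if waited >= timeout_s:
            raise TimeoutError(f"query still {state} after {waited:.0f}s")
        sleep(delay)
        waited += delay
        delay = min(delay * 2, max_delay_s)  # exponential backoff, capped


# Simulated state sequence so the example runs without AWS access.
states = iter(["QUEUED", "RUNNING", "SUCCEEDED"])
print(wait_for_query(lambda: next(states), sleep=lambda seconds: None))
# SUCCEEDED
```

With boto3, `get_state` could wrap `client.get_query_execution(QueryExecutionId=query_id)["QueryExecution"]["Status"]["State"]`.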

Schema Evolution and Data Consistency

When source data changes (e.g., new JSON fields added), the Athena table schema may become outdated. This can lead to query errors or missing data.

Solutions include:

- Using AWS Glue crawlers to automatically update the schema

- Implementing schema validation in data ingestion pipelines

- Leveraging an open table format such as Apache Iceberg for better schema evolution support (available in Athena Engine Version 3)

AWS recently introduced Apache Iceberg support in Athena, enabling better schema management and time travel queries.
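Schema validation in an ingestion pipeline can start very simply. The sketch below compares the keys of an incoming JSON record with the columns declared in the table; the function name is hypothetical, and the column set mirrors the logs_json example from earlier.

```python
import json


def find_schema_drift(record: dict, declared_columns: set[str]) -> dict:
    """Report fields the table does not declare and declared columns the record lacks."""
    keys = set(record)
    return {
        "unexpected": sorted(keys - declared_columns),
        "missing": sorted(declared_columns - keys),
    }


declared = {"timestamp", "level", "message"}
line = '{"timestamp": "2024-04-05T10:00:00Z", "level": "ERROR", "message": "boom", "trace_id": "abc"}'
print(find_schema_drift(json.loads(line), declared))
# {'unexpected': ['trace_id'], 'missing': []}
```

A pipeline could log or alert on unexpected fields so the table definition is updated before analysts notice missing data.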

What is AWS Athena used for?

AWS Athena is used to run SQL queries on data stored in Amazon S3 without needing to manage servers. It’s commonly used for log analysis, business intelligence, ad-hoc querying, and data exploration in data lakes.

Is AWS Athena free to use?

No. AWS Athena follows a pay-per-query model at $5 per terabyte of data scanned. There is no upfront cost or minimum monthly fee, and you pay only for the data your queries scan. Athena is not included in the AWS Free Tier, so charges apply from the first query.

How does AWS Athena differ from Amazon Redshift?

Athena is serverless and ideal for ad-hoc queries on S3 data, while Redshift is a fully managed data warehouse for complex analytics and high-performance workloads. Athena requires no setup, whereas a provisioned Redshift cluster must be sized and managed (Redshift Serverless removes much of that work).

Can I use AWS Athena with non-AWS data sources?

Yes, using Athena Federated Query, you can query data from external sources like RDS, DynamoDB, and on-premises databases through Lambda functions, enabling hybrid data analysis.

Does AWS Athena support streaming data?

Athena itself doesn’t process streaming data in real-time, but you can store streaming data (e.g., from Kinesis Firehose) into S3 and query it shortly after arrival, enabling near-real-time analytics.

Amazon Athena revolutionizes how organizations interact with data in the cloud. By eliminating infrastructure management and supporting standard SQL, it empowers teams to derive insights from S3 data quickly and securely. With proper optimization—like using Parquet, partitioning, and IAM controls—Athena becomes a cost-effective, scalable analytics engine. Whether you’re analyzing logs, generating reports, or exploring big data, AWS Athena offers a powerful, serverless solution that grows with your needs.
