AWS Glue: 7 Powerful Features You Must Know in 2024

If you’re working with data in the cloud, AWS Glue is a game-changer. This fully managed ETL service simplifies how you prepare and load data for analytics—no servers to manage, no infrastructure to maintain. Let’s dive into why AWS Glue is a must-have in your data toolkit.

What Is AWS Glue and Why It Matters

AWS Glue is a fully managed extract, transform, and load (ETL) service provided by Amazon Web Services. It enables data engineers and analysts to prepare data for analytics with minimal manual intervention. By automating much of the ETL pipeline, AWS Glue reduces the time and effort required to move data from various sources to a data warehouse or data lake.

Core Components of AWS Glue

AWS Glue is built on a few key components that work together seamlessly to deliver a powerful ETL experience. These include the Data Catalog, Crawlers, ETL Jobs, and Glue Studio. Each plays a vital role in the data integration process.

- Data Catalog: Acts as a persistent metadata store, similar to a data dictionary, where table definitions, schema, and data types are stored.

- Crawlers: Automatically scan data sources (like S3, RDS, Redshift) to infer schema and populate the Data Catalog.

- ETL Jobs: The workhorses that perform the actual data transformation using Python or Scala scripts.

- Glue Studio: A visual interface for authoring ETL jobs, which generates the underlying script for you.

How AWS Glue Simplifies ETL

Traditional ETL processes require significant setup—servers, clusters, configuration files, and manual scripting. AWS Glue removes that complexity by offering a serverless architecture. You define your data sources and targets, and AWS Glue handles the rest: provisioning resources, running jobs, and scaling automatically.

“AWS Glue automates the heavy lifting of ETL, allowing you to focus on data quality and transformation logic rather than infrastructure.” — AWS Official Documentation

Key Features of AWS Glue

AWS Glue stands out due to its rich set of features designed to streamline data integration. From automatic schema detection to visual job authoring, it offers tools that cater to both technical and non-technical users.

Automatic Schema Discovery with Crawlers

One of the most powerful features of AWS Glue is its ability to automatically detect the schema of your data. Using Crawlers, it scans data stored in Amazon S3, JDBC databases, and other sources, then infers the structure and stores it in the AWS Glue Data Catalog. This eliminates the need for manual schema definition, saving hours of work.

For example, a Glue Crawler pointed at an S3 bucket can detect column names and data types (string, integer, timestamp) in CSV files, as well as nested structures in JSON or Parquet files. This metadata becomes immediately available for querying via Amazon Athena or for use in ETL jobs.
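To make the idea of schema inference concrete, here is a toy sketch in plain Python. It is not Glue's actual classifier logic, which is more sophisticated. It only shows the general approach of sampling rows and picking the narrowest type that fits every sampled value. The function names and the sample data are made up for illustration.

```python
import csv
import io
from datetime import datetime


def infer_type(values):
    """Return the narrowest type name that fits every sampled value."""
    def all_parse(parser):
        for v in values:
            try:
                parser(v)
            except ValueError:
                return False
        return True

    if all_parse(int):
        return "bigint"
    if all_parse(float):
        return "double"
    if all_parse(datetime.fromisoformat):
        return "timestamp"
    return "string"


def infer_schema(csv_text, sample_rows=100):
    """Infer {column: type} from the header row and a sample of data rows."""
    reader = csv.DictReader(io.StringIO(csv_text))
    sample = [row for _, row in zip(range(sample_rows), reader)]
    return {name: infer_type([row[name] for row in sample])
            for name in reader.fieldnames}


# Hypothetical sample file contents.
SAMPLE = """order_id,amount,created_at,country
1001,19.99,2024-01-15T10:30:00,DE
1002,5.00,2024-01-15T11:02:13,US
"""

print(infer_schema(SAMPLE))
```

Because only a sample is inspected, a value that appears later in the file can contradict the inferred type, which is one reason to review crawler output before relying on it.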

Serverless ETL Jobs with Dynamic Scaling

AWS Glue runs ETL jobs in a serverless environment, meaning you don’t have to manage clusters. When you trigger a job, AWS Glue provisions the necessary compute resources for you (using Apache Spark under the hood). You choose a worker type and a maximum number of workers, and with Auto Scaling enabled (Glue 3.0 and later) the service adds or removes workers as the workload changes.

You pay only for the compute time used, which keeps cost proportional to the work done. The same script can usually handle larger datasets by raising the worker limit rather than changing code. You can monitor job performance, logs, and metrics directly in the AWS Management Console or via CloudWatch.

Visual ETL Development with Glue Studio

Not everyone is comfortable writing code. AWS Glue Studio provides a drag-and-drop interface for building ETL pipelines visually. You can select sources, apply transformations (like filtering, joining, or aggregating), and define targets—all without writing a single line of code.

Behind the scenes, Glue Studio generates Python scripts using the AWS Glue PySpark library. This makes it easy to start visually and then customize the script later for advanced logic. It’s ideal for data analysts or business users who want to participate in data preparation.

How AWS Glue Works: The Architecture

Understanding the architecture of AWS Glue helps in designing efficient data pipelines. At its core, AWS Glue follows a metadata-driven approach where the Data Catalog acts as the central repository for all schema information.

Data Catalog: The Heart of AWS Glue

The AWS Glue Data Catalog is more than just a metadata store—it’s a fully managed, scalable, and searchable repository. It’s compatible with Apache Hive Metastore, which means tools like Amazon EMR, Athena, and Redshift Spectrum can query the same metadata.

You can organize your catalog using databases and tables, add custom classifiers for non-standard formats, and even set up access controls using IAM policies. This makes it a secure and collaborative environment for enterprise data governance.

Crawlers and Classifiers

Crawlers use classifiers to determine the format of your data. AWS Glue comes with built-in classifiers for common formats like CSV, JSON, XML, and Parquet. You can also create custom classifiers using Grok patterns for log files or other semi-structured data.
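If Grok patterns are new to you, the following sketch may help. A Grok pattern is essentially a regular expression assembled from named building blocks. This is a minimal illustration in plain Python, not the Grok engine Glue uses. The pattern dictionary holds only three simplified entries, and the log line is hypothetical.

```python
import re

# A small, simplified subset of named patterns; real Grok libraries ship many more.
PATTERNS = {
    "TIMESTAMP_ISO8601": r"\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}",
    "LOGLEVEL": r"DEBUG|INFO|WARN|ERROR",
    "GREEDYDATA": r".*",
}


def grok_to_regex(grok_pattern):
    """Expand each %{NAME:field} token into a named regex group."""
    def expand(match):
        name, field = match.group(1), match.group(2)
        return f"(?P<{field}>{PATTERNS[name]})"
    return re.compile(re.sub(r"%\{(\w+):(\w+)\}", expand, grok_pattern))


GROK = "%{TIMESTAMP_ISO8601:timestamp} %{LOGLEVEL:level} %{GREEDYDATA:message}"
line = "2024-01-15T10:30:00 ERROR payment service timed out"

fields = grok_to_regex(GROK).match(line).groupdict()
print(fields)
```

Each named field in the pattern becomes a column candidate, which is how a custom classifier can turn free-form log lines into a table definition.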

When a crawler runs, it connects to a data store, samples the data, applies the appropriate classifier, and creates or updates table definitions in the catalog. You can schedule crawlers to run periodically to keep metadata up to date as new files arrive.
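As a rough sketch, a scheduled crawler over an S3 prefix could be defined through the Glue CreateCrawler API like this. The crawler name, role ARN, database, and bucket path are placeholders, and the helper function is only a convenience for building the request. The actual API call is left as a comment so the snippet runs without AWS credentials.

```python
def crawler_request(name, role_arn, database, s3_path, schedule=None):
    """Build keyword arguments for the Glue CreateCrawler API call."""
    request = {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        "Targets": {"S3Targets": [{"Path": s3_path}]},
    }
    if schedule:
        # Glue schedules use a six-field cron expression wrapped in cron(...).
        request["Schedule"] = schedule
    return request


# All values below are hypothetical placeholders.
request = crawler_request(
    name="sales-raw-crawler",
    role_arn="arn:aws:iam::123456789012:role/GlueCrawlerRole",
    database="sales_raw",
    s3_path="s3://example-bucket/sales/raw/",
    schedule="cron(0 2 * * ? *)",  # every day at 02:00 UTC
)
print(request)

# With boto3 installed and credentials configured, the request would be sent as:
#   import boto3
#   boto3.client("glue").create_crawler(**request)
```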

ETL Jobs and Script Generation

Once the schema is in the catalog, you can create ETL jobs. AWS Glue supports both Python (PySpark) and Scala. When you create a job, Glue automatically generates a script template that reads from the source, applies basic transformations, and writes to the target.

You can enhance this script with custom logic—handling nulls, deduplicating records, or enriching data with external APIs. Jobs can be triggered on a schedule, via events (like S3 uploads), or programmatically using AWS Lambda or Step Functions.

Use Cases for AWS Glue

AWS Glue is versatile and can be applied across various data integration scenarios. Whether you’re building a data lake, migrating databases, or preparing data for machine learning, AWS Glue has a solution.

Building a Data Lake on Amazon S3

One of the most common use cases is building a data lake. Organizations store raw data from multiple sources (logs, databases, APIs) in Amazon S3. AWS Glue Crawlers catalog this data, and ETL jobs clean, transform, and organize it into a structured format (like Parquet or ORC) for efficient querying.

For example, a retail company might use AWS Glue to ingest sales data from POS systems, customer data from CRM, and inventory data from ERP systems—all into a centralized S3 data lake. From there, analysts can use Athena or QuickSight for reporting.

Database Migration and Modernization

When migrating from on-premises databases to AWS, AWS Glue can automate the ETL process. You can use it to extract data from legacy systems (like Oracle or SQL Server via JDBC), transform it to fit a new schema, and load it into Amazon Redshift or Aurora.

Additionally, AWS Glue can help modernize data warehouses by incrementally loading change data capture (CDC) streams using AWS DMS in combination with Glue jobs.

Real-Time Data Processing with Glue Streaming

While AWS Glue is traditionally used for batch processing, it also supports streaming ETL jobs. These let you process data from Amazon Kinesis Data Streams or Amazon MSK (Managed Streaming for Apache Kafka) in near real time.

For instance, a financial services firm might use Glue Streaming to detect fraudulent transactions by analyzing payment streams in real time, enriching them with customer profiles, and triggering alerts when anomalies are detected.

Integrations with Other AWS Services

AWS Glue doesn’t work in isolation—it’s designed to integrate seamlessly with other AWS services, forming a robust data ecosystem.

Integration with Amazon S3 and Athena

Amazon S3 is the most common data source and target for AWS Glue. Once Glue catalogs your S3 data, you can query it directly using Amazon Athena, a serverless query service. This combination enables powerful ad-hoc analytics without needing a traditional data warehouse.

For example, after Glue converts logs into a partitioned, columnar format, Athena reads only the columns and partitions a query needs, which cuts both query time and the amount of data scanned.

Connecting with Amazon Redshift and RDS

AWS Glue can extract data from Amazon RDS (MySQL, PostgreSQL, Oracle, etc.) and load it into Amazon Redshift for analytics. It supports JDBC connections and can handle complex joins and transformations during the ETL process.

You can also use Glue to offload data from Redshift to S3 for long-term storage, helping manage costs while maintaining query performance.

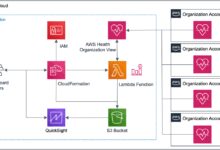

Event-Driven Workflows with Lambda and EventBridge

To build responsive data pipelines, AWS Glue can be triggered by events. For example, when a new file is uploaded to S3, an S3 event can invoke an AWS Lambda function, which then starts a Glue job. Similarly, Amazon EventBridge can schedule jobs or react to system events.

This event-driven architecture shortens the delay between data arriving and data being available. Each job run still has some startup time, so treat it as near real time rather than instantaneous.
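The S3-to-Lambda-to-Glue flow described above can be sketched as a small Lambda handler. The job name and the `--source_path` argument are hypothetical, and the `glue_client` parameter is an addition that is not part of the Lambda handler signature. It is there so the function can be exercised locally with a stand-in client.

```python
import urllib.parse


def handler(event, context, glue_client=None):
    """Start one Glue job run per uploaded S3 object in the event."""
    if glue_client is None:
        import boto3  # available in the AWS Lambda Python runtime
        glue_client = boto3.client("glue")

    run_ids = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        response = glue_client.start_job_run(
            JobName="process-uploads",  # hypothetical job name
            Arguments={"--source_path": f"s3://{bucket}/{key}"},
        )
        run_ids.append(response["JobRunId"])
    return run_ids
```

Starting one run per object is the simplest design but can get expensive when many small files arrive at once. Batching uploads or relying on job bookmarks may be a better fit in that case.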

Performance Optimization in AWS Glue

While AWS Glue is serverless and auto-scales, performance tuning is still essential for cost and efficiency. Several strategies can help you get the most out of your Glue jobs.

Job Bookmarks to Avoid Re-Processing

Job bookmarks are a powerful feature that tracks the state of data processed by a job. This prevents reprocessing the same data when the job runs again—critical for incremental ETL.

For example, if you’re processing daily log files, a job bookmark ensures only new files are processed on subsequent runs. This reduces execution time and cost significantly.
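In a real job, bookmarks are switched on with the job parameter `--job-bookmark-option` set to `job-bookmark-enable`, and Glue keeps the state for you. The sketch below only imitates the underlying idea in plain Python, assuming a bookmark that remembers the newest modification time already processed. Glue's actual bookkeeping is more involved, and the file names and timestamps are invented.

```python
def select_new_files(files, bookmark):
    """Return the keys modified after the bookmark, plus the advanced bookmark.

    files: list of (key, last_modified) tuples, last_modified in epoch seconds.
    bookmark: last_modified of the newest file handled so far, or None on
    the first run.
    """
    new = [f for f in files if bookmark is None or f[1] > bookmark]
    next_bookmark = max((f[1] for f in new), default=bookmark)
    return [key for key, _ in new], next_bookmark


# First run: nothing processed yet, so both files are picked up.
day1 = [("logs/2024-01-14.gz", 1705190400), ("logs/2024-01-15.gz", 1705276800)]
processed, bookmark = select_new_files(day1, None)

# Second run: one new file has arrived; only that file is selected.
day2 = day1 + [("logs/2024-01-16.gz", 1705363200)]
processed_again, bookmark = select_new_files(day2, bookmark)
print(processed_again)
```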

Using Glue Elastic Views for Materialized Views

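AWS Glue Elastic Views was announced as a preview feature for creating materialized views that combine and replicate data across different data stores. The intended use was, for instance, a view that joins customer data from Aurora with order data from DynamoDB and makes it available in Redshift or OpenSearch. Check the current AWS documentation for its availability before designing around it, since preview features can change or be withdrawn.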

This eliminates the need for complex ETL jobs just to synchronize data and enables real-time analytics across disparate systems.

Partitioning and Compression Strategies

To improve query performance and reduce costs, structure your data with proper partitioning (e.g., by date or region) and use efficient compression formats like Snappy or Gzip. AWS Glue can write partitioned data automatically if you specify the partition keys in your job.

Additionally, converting data from row-based formats (like CSV) to columnar formats (like Parquet or ORC) can drastically improve query speed and reduce storage costs.
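Partitioned data in S3 is usually laid out with Hive-style `key=value` prefixes, which is what query engines use to skip irrelevant files. Here is a small sketch of that layout. The bucket name and the choice of partition columns are assumptions for illustration. In a Glue job you would normally not build paths by hand but pass the partition columns as `partitionKeys` in the sink's connection options.

```python
from datetime import date


def partition_prefix(base, day, region):
    """Build a Hive-style partition prefix such as .../year=2024/month=01/..."""
    return (f"{base.rstrip('/')}/region={region}"
            f"/year={day:%Y}/month={day:%m}/day={day:%d}/")


print(partition_prefix("s3://example-bucket/sales", date(2024, 1, 15), "eu"))
# s3://example-bucket/sales/region=eu/year=2024/month=01/day=15/
```

A query filtered on `region = 'eu' AND year = '2024'` then only needs to read objects under the matching prefixes.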

Security and Compliance in AWS Glue

Security is a top priority when handling sensitive data. AWS Glue provides multiple layers of protection to ensure your data pipelines are secure and compliant.

Encryption and IAM Policies

AWS Glue supports encryption at rest and in transit. You can enable AWS KMS (Key Management Service) to encrypt data stored in S3 and the Data Catalog. All data transferred between Glue and other services is encrypted using TLS.

IAM roles and policies control who can create, run, or modify Glue jobs and access the Data Catalog. You can apply fine-grained permissions—for example, allowing only specific users to run jobs that access PII data.
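As an illustration of such a fine-grained permission, the policy below allows starting and inspecting runs of a single job only. The account ID, region, and job name are placeholders, and a real setup would also need to cover the job's own role and its data access.

```python
import json

# Hypothetical account ID, region, and job name.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "RunPiiJobOnly",
            "Effect": "Allow",
            "Action": ["glue:StartJobRun", "glue:GetJobRun", "glue:GetJobRuns"],
            "Resource": "arn:aws:glue:us-east-1:123456789012:job/pii-customer-etl",
        }
    ],
}

print(json.dumps(policy, indent=2))
```

Attaching this to a dedicated group or role, rather than to individual users, keeps it easy to audit who can run the PII job.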

VPC and Network Isolation

For enhanced security, you can configure AWS Glue jobs to run inside a Virtual Private Cloud (VPC). This allows you to control network access to your data sources, especially when connecting to on-premises databases via AWS Direct Connect or Site-to-Site VPN.

By placing Glue jobs in a private subnet and reaching services such as S3 through VPC endpoints, you can keep traffic off the public internet, which helps meet strict compliance requirements.

Audit Logging and Monitoring

AWS Glue integrates with AWS CloudTrail and CloudWatch for auditing and monitoring. CloudTrail logs all API calls (like job starts, crawler runs), while CloudWatch captures job metrics, logs, and alarms.

You can set up alerts for job failures, long-running jobs, or high resource usage, enabling proactive issue resolution.
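Alongside CloudWatch alarms, a script or orchestration step can check a run's state directly through the GetJobRun API. This is a minimal polling sketch. The client is passed in (normally `boto3.client("glue")`), and the `sleep` parameter is a hypothetical addition that allows the wait to be replaced when testing.

```python
import time

# Job run states after which the run will not change any further.
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "STOPPED", "TIMEOUT", "ERROR"}


def wait_for_job(glue_client, job_name, run_id, poll_seconds=30, sleep=time.sleep):
    """Poll GetJobRun until the run reaches a terminal state; return that state."""
    while True:
        run = glue_client.get_job_run(JobName=job_name, RunId=run_id)["JobRun"]
        state = run["JobRunState"]
        if state in TERMINAL_STATES:
            return state
        sleep(poll_seconds)
```

A caller might raise an exception or send a notification whenever the returned state is anything other than `SUCCEEDED`.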

Common Challenges and Best Practices

While AWS Glue is powerful, users often face challenges related to cost, performance, and complexity. Following best practices can help avoid common pitfalls.

Managing Costs with Right-Sizing

Since AWS Glue charges based on Data Processing Units (DPUs), inefficient jobs can become expensive. To optimize costs:

- Use job bookmarks to avoid reprocessing.

- Limit the number of DPUs allocated unless processing large datasets.

- Monitor job duration and optimize scripts for faster execution.
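A quick back-of-the-envelope calculation shows how DPU count and runtime trade off. The default rate of 0.44 USD per DPU-hour and the 60-second billing minimum are assumptions used for illustration only. Actual prices vary by region and Glue version, so check the pricing page before relying on the numbers.

```python
def estimate_job_cost(dpus, runtime_seconds, price_per_dpu_hour=0.44,
                      minimum_seconds=60):
    """Estimate the cost of one job run, billed per second with a minimum.

    The default price and minimum are illustrative assumptions, not quotes.
    """
    billed_seconds = max(runtime_seconds, minimum_seconds)
    return dpus * (billed_seconds / 3600) * price_per_dpu_hour


# 10 DPUs for 15 minutes versus 4 DPUs for 30 minutes.
print(round(estimate_job_cost(10, 15 * 60), 2))  # 1.1
print(round(estimate_job_cost(4, 30 * 60), 2))   # 0.88
```

In this example the smaller allocation is cheaper even though the job runs twice as long, which is why adding DPUs only pays off when the job actually parallelizes well.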

Handling Schema Evolution

Data schemas often change: new columns are added and types are modified. Crawlers can update table definitions in the Data Catalog when this happens, but your jobs still need to be written to tolerate the change. Use the ApplyMapping transform (apply_mapping on a DynamicFrame) to define field mappings explicitly, so the output schema stays fixed even when new columns appear upstream.
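The mapping list passed to ApplyMapping is a list of four-element tuples: source field, source type, target field, target type. The sketch below uses that same tuple shape but applies it with a plain-Python imitation, so it runs without a Glue environment. The field names are invented, and the handling of missing fields (set to `None`) is a simplification of this toy version rather than a statement about Glue's behavior.

```python
# Each mapping is (source field, source type, target field, target type),
# the tuple shape that Glue's ApplyMapping transform expects.
MAPPINGS = [
    ("order_id", "string", "order_id", "long"),
    ("amt", "string", "amount", "double"),
    ("ts", "string", "created_at", "string"),
]

# Toy casts standing in for Glue's type conversion.
CASTS = {"long": int, "double": float, "string": str}


def apply_mapping(record, mappings):
    """Keep only mapped fields, renaming and casting each one."""
    out = {}
    for source, _source_type, target, target_type in mappings:
        value = record.get(source)
        out[target] = None if value is None else CASTS[target_type](value)
    return out


old_row = {"order_id": "1001", "amt": "19.99", "ts": "2024-01-15T10:30:00"}
new_row = {**old_row, "coupon_code": "WINTER"}  # upstream added a column

# The unmapped column is dropped, so both rows produce the same output.
print(apply_mapping(old_row, MAPPINGS) == apply_mapping(new_row, MAPPINGS))

# In a Glue job the same list would be passed as:
#   ApplyMapping.apply(frame=dynamic_frame, mappings=MAPPINGS)
```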

Testing and Debugging Glue Jobs

Debugging ETL jobs can be tricky. AWS Glue provides interactive sessions with Jupyter notebook integration (and, on older Glue versions, development endpoints) so you can test scripts interactively. You can also enable continuous logging to CloudWatch and use AWS Glue’s built-in job monitoring dashboard.

For complex transformations, break the job into smaller steps and validate each stage independently.
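One way to follow that advice is to write each stage as a small function and check its output before moving on. The sketch below uses plain Python lists of dictionaries with made-up records. In a real job the same structure would apply to DataFrames or DynamicFrames.

```python
def drop_null_ids(rows):
    """Stage 1: discard rows without a customer_id."""
    return [r for r in rows if r.get("customer_id") is not None]


def deduplicate(rows):
    """Stage 2: keep the first row seen for each customer_id."""
    seen, unique = set(), []
    for r in rows:
        if r["customer_id"] not in seen:
            seen.add(r["customer_id"])
            unique.append(r)
    return unique


# Hypothetical input with one null ID and one duplicate.
raw = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": None, "email": "b@example.com"},
    {"customer_id": 1, "email": "a@example.com"},
]

stage1 = drop_null_ids(raw)
assert all(r["customer_id"] is not None for r in stage1)

stage2 = deduplicate(stage1)
assert len({r["customer_id"] for r in stage2}) == len(stage2)

print(len(raw), len(stage1), len(stage2))
```

When a check fails, the failing stage is immediately clear, which is much harder to see when all the logic sits in one long script.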

What is AWS Glue used for?

AWS Glue is used for automating extract, transform, and load (ETL) processes in the cloud. It helps clean, transform, and load data from various sources into data lakes, data warehouses, or analytics services like Amazon Redshift, Athena, or QuickSight.

Is AWS Glue serverless?

Yes, AWS Glue is a fully serverless service. It automatically provisions and scales the necessary compute resources (based on Apache Spark) to run ETL jobs, so you don’t have to manage any infrastructure.

How much does AWS Glue cost?

AWS Glue pricing is based on Data Processing Units (DPUs): you pay an hourly rate per DPU, billed by the second, for the time your workloads run. Crawlers, ETL jobs, and development endpoints are billed separately. The Data Catalog is charged for storage and requests, with a free monthly allowance. For current rates, visit the AWS Glue pricing page.

Can AWS Glue handle real-time data?

Yes, AWS Glue supports streaming ETL jobs, which can process data from Amazon Kinesis Data Streams and Amazon MSK in near real time, enabling low-latency analytics and event-driven workflows.

How does AWS Glue compare to AWS Data Pipeline?

AWS Data Pipeline is primarily an orchestration service for moving data between stores, and it requires more manual setup and scripting. AWS Glue adds automated schema discovery, code generation, and built-in ETL capabilities on serverless compute.

AWS Glue is a powerful, serverless ETL service that simplifies data integration in the cloud. From automatic schema discovery to visual development and real-time streaming, it offers a comprehensive suite of tools for modern data engineering. By integrating with other AWS services and supporting best practices in security and performance, AWS Glue empowers organizations to build scalable, efficient, and secure data pipelines. Whether you’re building a data lake, migrating databases, or enabling real-time analytics, AWS Glue is a critical component of any cloud data strategy.
